Accreditation period Units 1 and 2 from 2025; Units 3 and 4 from 2025

General assessment advice

Advice on matters related to the administration of Victorian Certificate of Education (VCE) assessment is published annually in the VCE Administrative Handbook.

Updates to matters related to the administration of VCE assessment are published in the VCAA Bulletin.

Subscribe to the VCAA Bulletin.

Teachers must refer to these publications for current advice.

The VCE assessment principles underpin all VCE assessment practices and should guide teachers in their design and implementation of School-assessed Coursework (SACs) and School-assessed Tasks (SATs). When developing SAC and SAT tasks, teachers should also refer to the VCAA policies and school assessment procedures as specified in the VCE Administrative Handbook section: Scored assessment: School-based Assessment.

The VCAA assessment principles determine that assessment of the VCE should be:

- valid and reasonable

- equitable

- balanced

- efficient.

Essentially, these principles invite schools and teachers to create assessment practices, including tasks and tools, that enable students to demonstrate their knowledge and understanding of the outcome statements, key knowledge and key skills through a range of opportunities and in different contexts (balanced); that do not advantage or disadvantage certain groups of students on the basis of their circumstances and contexts (equitable); that are not overly onerous in terms of workload and time (efficient); and that assess only what is explicitly described in the study design (valid and reasonable).

The glossary of command terms provides a list of terms commonly used across the Victorian Curriculum F–10, VCE study designs and VCE examinations, to help students better understand the requirements of command terms in the context of their discipline.

VCE Applied Computing examination specifications, past examination papers and corresponding examination reports can be accessed from the VCE examination webpages.

Graded Distributions for Graded Assessment can be accessed from the VCAA Senior Secondary Certificate Statistical Information webpage.

Excepting third-party elements, schools may use this resource in accordance with the VCAA’s Educational Allowance (VCAA Copyright and Intellectual Property Policy).

For Units 1–4, assessment tasks should be part of the regular teaching and learning program and should not add unduly to student workload. Students should be clearly informed of the timelines and the conditions under which assessment tasks are to be conducted, including whether any resources are permitted.

Points to consider in developing an assessment task:

- List the relevant content from the areas of study and the relevant key knowledge and key skills for the outcomes.

- Develop the assessment task according to the specifications in the study design. It is possible for students in the same class to undertake different tasks, or variations of components for a task; however, teachers must ensure that the tasks or variations are comparable in scope and demand.

- Identify the qualities and characteristics that you are looking for in a student response and map these to the criteria, descriptors, rubrics or marking schemes being used to assess the level of achievement.

- Identify the nature and sequence of teaching and learning activities to cover the relevant content, and key knowledge and key skills outlined in the study design, and to provide for different learning styles.

- Decide the most appropriate time to set the task. This decision is the result of several considerations, including:

  - the estimated time it will take to cover the relevant content from the areas of study and the relevant key knowledge and key skills for the outcomes

  - the possible need to provide preparatory activities or tasks

  - the likely length of time required for students to complete the task

  - when tasks are being conducted in other studies and the workload implications for students.

Units 1 and 2

All assessments for Units 1 and 2 are school-based. The determination of a satisfactory (S) or not satisfactory (N) for each of Units 1 and 2 is a separate consideration from the assessment of levels of achievement. This distinction means that a student can receive a very low numerical score in a formal assessment task but still achieve an S for the outcome.

The decision about satisfactory completion of outcomes is based on the teacher’s judgement of the student’s overall performance on a combination of set work and assessment tasks related to the outcomes. Students should be provided with multiple opportunities across the learning program to develop and demonstrate the key knowledge and key skills required for the outcomes for the unit. If, in the judgement of the teacher, a student has not met the required standard for satisfactory completion of an outcome through the set work and assessment task(s), the student should be given additional opportunities to demonstrate the outcome by submitting further evidence. For example, a teacher may consider work previously submitted (class work, homework), additional tasks or discussions with the student that demonstrate their achievement of the outcome (e.g. a student may demonstrate their understanding in a different language mode, such as speaking rather than writing), provided this evidence meets the requirements and is consistent with established school processes.

Procedures for assessment of levels of achievement in Units 1 and 2 are a matter for schools to decide. Schools have flexibility in deciding how many and which assessment tasks they use for each outcome, provided that these decisions are in accordance with the VCE Applied Computing Study Design and the VCE assessment principles.

Teachers should note the cognitive demand of the command terms in the outcome statements to determine the type of teaching and learning activities and evidence of student understanding that will be needed for students to demonstrate satisfactory completion of each outcome.

Units 3 and 4: Data analytics – School-assessed Task

When designing learning activities for the School-assessed Task, teachers should refer to the problem-solving methodology specifications, and the areas of study and outcomes, including key knowledge and key skills, as listed in the VCE Applied Computing Study Design.

The following table gives a breakdown of the four stages of the problem-solving methodology for the School-assessed Task.

| Outcome | Stage | Activities |
|---|---|---|
| Unit 3 Outcome 2 | Project management | Create, monitor and modify project plans (Gantt chart) |
| Unit 3 Outcome 2 | Analysis | Propose a research question; analyse and document solution requirements, constraints and scope; search for, collect, reference and manage data sets |
| Unit 3 Outcome 2 | Design | Generate design ideas; develop evaluation criteria; produce detailed designs |
| Unit 4 Outcome 1 | Project management | Monitor, modify and annotate project plans; assess the effectiveness of the project plan |
| Unit 4 Outcome 1 | Development | Conduct statistical analysis; secure data; develop infographics and/or dynamic data visualisations; validate, verify and test |
| Unit 4 Outcome 1 | Evaluation | Evaluate the efficiency and effectiveness of the infographics and/or dynamic data visualisations |

The Data Analytics School-assessed Task is in two parts involving Unit 3 Outcome 2 and Unit 4 Outcome 1.

Throughout the School-assessed Task students will develop their critical and creative thinking, communication, personal and digital literacy skills.

The School-assessed Task contributes 30 per cent to the study score for Data Analytics. Details of the assessment task can be found on page 51 of the VCE Applied Computing Study Design.

Teachers must be aware of the current VCE Applied Computing: Data Analytics Administrative information for School-based Assessment. This document contains assessment information on the nature and scope of the task, mandated assessment rubrics and authentication information, including forms and School-based Assessment Task assessment sheets for scores. Teachers are reminded of the need to comply with the authentication requirements specified in the Assessment: School-based Assessment section of the current VCE Administrative Handbook. This is important to ensure that undue assistance is not provided to students undertaking assessment tasks.

Teachers must plan and use observations of student work in order to monitor and record each student’s progress as part of the authentication process. A record of these details is to be included on the Authentication record form in the VCE Applied Computing: Data Analytics: Administrative information for School-based Assessment.

The VCAA conducts professional learning for teachers of Data Analytics in February and March of each year through webinars known as SAT Webinars. Details of these professional learning sessions are advertised each year in the November edition of the VCAA Bulletin.

Unit 3: Area of Study 2 – Data analytics: analysis and design

The first part of the SAT requires students to propose a research question that will be developed into infographics and/or dynamic data visualisations to present their findings. Students should discuss their ideas with the teacher to ensure that the infographics and/or dynamic data visualisations can feasibly be completed within the timeframe available. Students also need to document their thinking so that both they and their teacher understand the tasks they are expected to complete and the methods and techniques they will use to achieve them.

For example, students could provide a statement of intent about their research question and the overall project. Teachers should not provide individual students or classes with research questions; students must generate their research question themselves.

Students will produce a project plan (Gantt chart) that outlines the tasks, sequencing, time allocation, dependencies, milestones and the critical path. They will follow the project plan to develop their infographics and/or dynamic data visualisations for their research question. The project plan takes into consideration all stages and activities of the problem-solving methodology covered in Unit 3 Outcome 2 and Unit 4 Outcome 1.
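The critical path is the longest chain of dependent tasks in the plan, so a delay to any task on it delays the whole project. The minimal Python sketch below shows how it can be worked out. It takes a list of tasks with durations and dependencies, calculates the earliest finish time of each task, then traces back from the task that finishes last. The task names and durations are hypothetical and chosen only for illustration. Students are not expected to write code to produce their Gantt chart. The sketch assumes Python 3.9 or later for `graphlib`.

```python
from graphlib import TopologicalSorter

# Hypothetical SAT tasks: name -> (duration in days, prerequisite tasks).
# Names and durations are illustrative only.
TASKS = {
    "research question": (3, []),
    "collect data": (10, ["research question"]),
    "requirements and scope": (4, ["research question"]),
    "design ideas": (5, ["requirements and scope"]),
    "evaluation criteria": (2, ["requirements and scope"]),
    "detailed designs": (6, ["design ideas", "evaluation criteria"]),
    "develop visualisations": (15, ["collect data", "detailed designs"]),
    "evaluate": (3, ["develop visualisations"]),
}


def critical_path(tasks):
    """Return (project length, tasks on the critical path in order)."""
    graph = {name: set(deps) for name, (_, deps) in tasks.items()}
    finish = {}       # earliest finish time of each task
    predecessor = {}  # the prerequisite that determines each task's start
    # Visit tasks so that every prerequisite is processed before its dependants.
    for name in TopologicalSorter(graph).static_order():
        duration, deps = tasks[name]
        start, predecessor[name] = 0, None
        for dep in deps:
            if finish[dep] > start:  # a task starts when its latest prerequisite ends
                start, predecessor[name] = finish[dep], dep
        finish[name] = start + duration
    # Trace back from the task that finishes last.
    current = max(finish, key=finish.get)
    path = []
    while current is not None:
        path.append(current)
        current = predecessor[current]
    return max(finish.values()), list(reversed(path))


length, path = critical_path(TASKS)
print(length, path)
```

With these hypothetical durations the project takes 36 days. The critical path runs through the requirements and design tasks. Data collection (finishing on day 13 but not needed until day 18) therefore has five days of slack.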

Once the project plan has been developed it needs to be monitored and modified throughout the entire project (both Unit 3 and Unit 4). Students do not have to use dedicated project-management software in the development of their project plan.

Below is a sample of a project plan.

The analysis stage of the problem-solving methodology requires students to propose a research question and to collect and analyse data. Students are required to collect data that will inform the analysis of their research question. Data collection should include a range of methods and techniques, including interviews, observation, querying of data stored in large repositories and surveys.

Teachers should encourage students to adopt the best possible approach when creating their research question. The question should be researchable within the timeframe available and allow for an analytical response rather than a descriptive one. A helpful guide is provided on the Monash University website.

Students document their analysis to clearly outline the use of data to support the research question for the proposed infographics and/or dynamic data visualisations. They should include a statement of functional and non-functional requirements, constraints and scope.

The example below is a sample process for developing a research question with the supporting documentation.

Research question: Should the Australian Government promote a greater reliance on renewable energy to reduce Australia’s contribution to climate change?

Functional requirements

- Data requirements

  - Primary data – surveys and interviews with peers, focus groups or experts

  - Secondary data – data from large repositories

- Infographics/dynamic data visualisations requirements

  - Data is simplified and presented as information using graphics

  - Users can interact with dynamic data visualisations

  - Users can view data changing over time

Non-functional requirements

- Ability of the viewer to understand the message being conveyed

- Accessibility

  - Use of different devices/screen sizes/operating systems

  - Understanding the needs of visually impaired people and incorporating some text/audio findings, as well as using colours that remain distinguishable for people with colour blindness

Constraints

- Economic

  - Time constraints: due dates

  - Limitations of data visualisation websites with free access rights

- Technical

  - Availability of equipment: labs, laptops, Wi-Fi

  - Security – use of cloud and network infrastructure

- Social

  - Level of expertise of users: need for an intuitive interface for dynamic data visualisations

- Legal

  - Protecting the privacy of those providing data: primary sources

  - Ownership of data: referencing secondary sources using the APA method

- Usability

  - Ease of use of solution: link with social constraint about intuitive interface

  - Usefulness: ensure that every interactive feature of a data visualisation improves the user’s experience

Scope

- Included in this solution:

  - Timeframe (2000–2024, and beyond using forecasts)

  - Countries (Australia and several relevant comparisons: USA, India, Canada, China)

  - Historical per-capita and gross production of CO2 emissions

  - Technology (restricted to currently implemented power generation technologies such as coal, gas, coal seam gas (CSG), photovoltaic solar, thermal and nuclear, as appropriate per nation)

  - Impact of global agreements on CO2 emissions (Kyoto Protocol, Paris Agreement)

References

- Need to follow the APA referencing style.

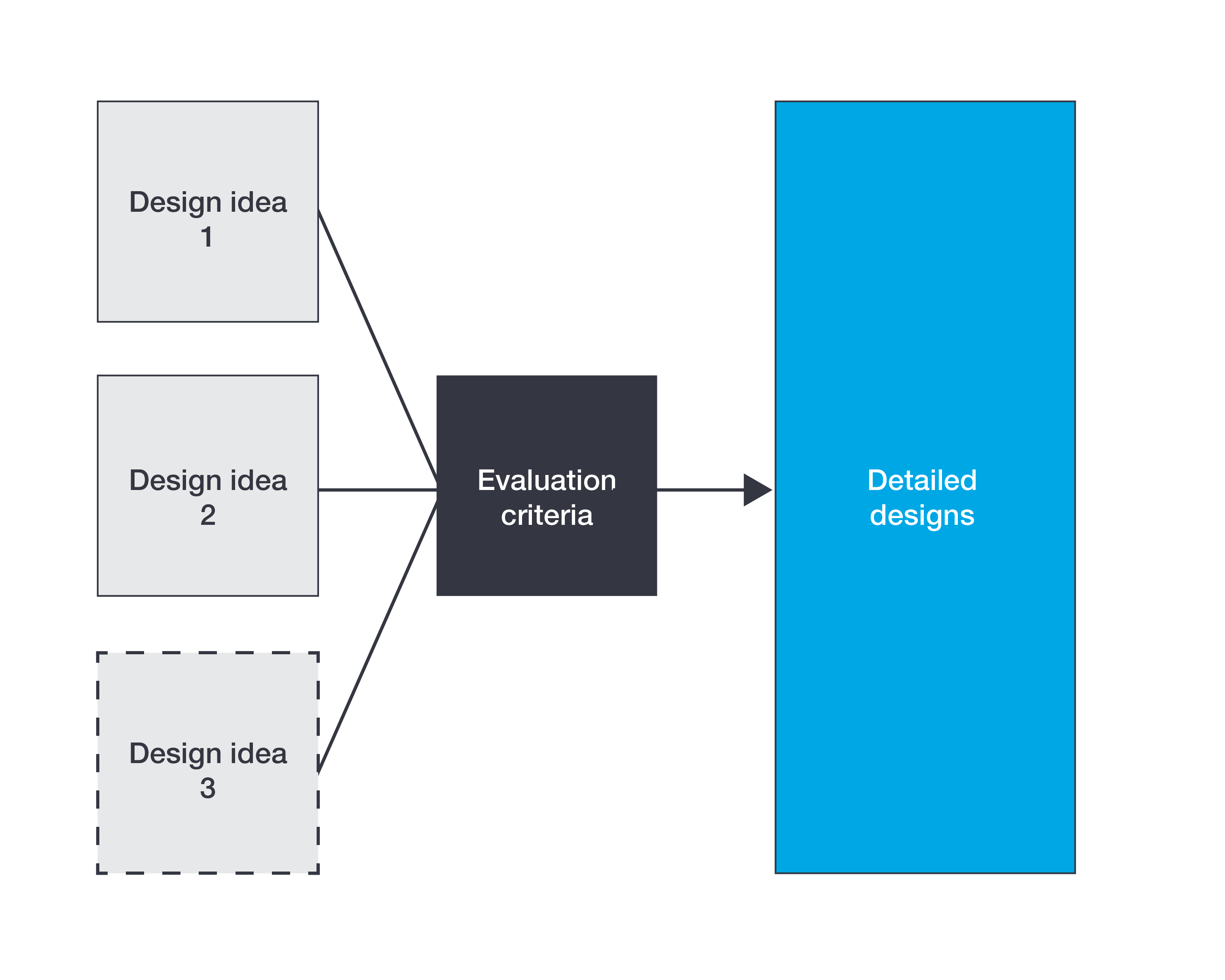

The design stage of the problem-solving methodology requires students to develop evaluation criteria, generate design ideas and develop and justify preferred designs.

Students demonstrate evidence of their design thinking by generating two or three design ideas for their proposed infographics and/or dynamic data visualisations. Students create the design ideas using ideation tools, including mood boards, brainstorming, mind maps, sketches and annotations.

These design ideas should be broad in nature and take into consideration the appearance of the infographics or the appearance and functionality of the dynamic data visualisations.

Students are required to develop evaluation criteria that will be used to evaluate their design ideas and, in Unit 4 Outcome 1, their infographics and/or dynamic data visualisations. Evaluation criteria for design ideas can include the appearance of the proposed infographics and the appearance and functionality of the proposed dynamic data visualisations. The evaluation criteria should reference the functional and non-functional requirements in the analysis and the effectiveness of the proposed infographics and/or dynamic data visualisations. The evaluation criteria are used to determine and justify which of the design ideas should be further developed into detailed designs.

The detailed designs should be representative of how the proposed infographics and/or dynamic data visualisations will appear or function. Examples of design tools for generating detailed designs may include storyboards, mock-ups, IPO charts and query designs. Detailed designs should be completed with annotations, explanations and justifications. Students are also to consider the design principles that influence the design of the infographics and/or dynamic data visualisations.

Figure 2 shows the process by which design ideas are refined, using the evaluation criteria, into detailed designs:

Figure 2: Process for designing infographics and/or dynamic data visualisations


The activities in this area of study need to be managed by the student as part of their project plan. This requires them to monitor their progress so that they remain on track to meet all the identified milestones throughout the School-assessed Task.

If the detailed designs generated and assessed in Unit 3 Area of Study 2 are incomplete or contain significant errors, students have the opportunity to make adjustments to their designs.

Teachers can provide feedback on the quality of the designs, but the adjustments must be student-initiated, not teacher-directed. The modified designs are not reassessed. However, this opportunity prevents errors in the designs from carrying over into the development stage in Unit 4 Outcome 1.

Unit 4: Area of Study 1 – Data analytics: development and evaluation

The second part of the SAT requires students to develop and evaluate the infographics and/or dynamic data visualisations to present the findings in response to the research question and assess the effectiveness of the project plan in monitoring progress.

Students are required to monitor, modify and annotate their project plan as they progress through the development and evaluation stages of the problem-solving methodology. This can include adjusting tasks and time allocations, and recording details in journals or work logs. Attention to detail throughout this process will enable students to better assess the effectiveness of the project plan in monitoring progress.

The development stage of the problem-solving methodology requires students to develop their detailed designs from Unit 3 Outcome 2 into infographics and/or dynamic data visualisations.

In order to develop their infographics and/or dynamic data visualisations, students are required to manipulate data using appropriate software tools. These tools must meet the prescribed list of software tools and functions, and the outcome-specific requirements of the study. Students should ensure that the data they have collected is in an appropriate format for visualisation and adjust it if necessary. Students should also refine their findings by analysing their data.
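As an illustration of adjusting collected data into a usable format, the minimal Python sketch below cleanses a small CSV extract. It trims spaces, standardises capitalisation and removes thousands separators. It also sets aside rows with blank or non-numeric values so that their exclusion can be documented. The column names and values are made up for the example. In practice this work would normally be done with the prescribed database and spreadsheet software.

```python
import csv
import io

# Hypothetical raw extract with made-up values: inconsistent spacing and
# capitalisation, a thousands separator, a blank value and a non-numeric entry.
RAW = """country,year,emissions
Australia, 2019 ,"1,250"
australia,2020,1200
India,2020,
China,2020,n/a
Canada,2020,980
"""


def cleanse(text):
    """Return (clean records, rejected rows) from a raw CSV string."""
    clean, rejected = [], []
    for row in csv.DictReader(io.StringIO(text)):
        try:
            record = {
                # Trim spaces and use consistent capitalisation for text values.
                "country": row["country"].strip().title(),
                "year": int(row["year"]),  # int() ignores surrounding spaces
                # Remove thousands separators before converting to a number.
                "emissions": float(row["emissions"].replace(",", "")),
            }
        except ValueError:
            # Blank or non-numeric values cannot be converted; set them aside
            # so the decision to exclude them can be documented.
            rejected.append(row)
            continue
        clean.append(record)
    return clean, rejected


clean, rejected = cleanse(RAW)
for record in clean:
    print(record)
print(len(rejected), "rows rejected")
```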

Techniques for analysing data to refine findings for data visualisations are to include:

- descriptive statistics (average, median, minimum, maximum, range, standard deviation, count/frequency, sum)

- Pearson’s correlation coefficient (r)

- the shape and skew of data.
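The statistics listed above can be calculated directly from their definitions. The Python sketch below uses made-up paired values and computes three things. First, it calculates the descriptive statistics. Second, it calculates Pearson’s r: the sum of the products of each pair’s deviations from the mean, divided by the square root of the product of the sums of squared deviations. Third, it calculates the adjusted sample skewness, the formula used by Excel’s SKEW function. The sketch is intended to show what spreadsheet functions calculate, not to replace them.

```python
import math
import statistics

# Hypothetical paired observations (made-up values, not real data).
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]


def describe(values):
    """Descriptive statistics named in the study design."""
    return {
        "average": statistics.mean(values),
        "median": statistics.median(values),
        "minimum": min(values),
        "maximum": max(values),
        "range": max(values) - min(values),
        # Sample standard deviation (divides by n - 1), like a spreadsheet's STDEV.S.
        "standard deviation": statistics.stdev(values),
        "count": len(values),
        "sum": sum(values),
    }


def pearson_r(xs, ys):
    """Pearson's r = sum(dx * dy) / sqrt(sum(dx^2) * sum(dy^2))."""
    mx, my = statistics.mean(xs), statistics.mean(ys)
    dx = [a - mx for a in xs]
    dy = [b - my for b in ys]
    numerator = sum(a * b for a, b in zip(dx, dy))
    return numerator / math.sqrt(sum(a * a for a in dx) * sum(b * b for b in dy))


def skewness(values):
    """Adjusted sample skewness: negative means a longer tail towards low values."""
    n = len(values)
    m = statistics.mean(values)
    s = statistics.stdev(values)
    return n / ((n - 1) * (n - 2)) * sum(((v - m) / s) ** 3 for v in values)


print(describe(y))
print(round(pearson_r(x, y), 3))
print(round(skewness(y), 3))
```

For these values r is about 0.775, a fairly strong positive relationship. The skewness is negative, indicating a longer tail towards lower values. Python 3.10 and later also provide `statistics.correlation`, which returns the same Pearson coefficient.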

Appropriate functions, techniques and procedures for the selected software tools are to be used, along with techniques for creating the infographics and/or dynamic data visualisations and techniques for validating and verifying data. Students may create infographics, dynamic data visualisations or both; they are not required to create both types.

There is a range of methods and techniques that students can use to meet this requirement using specialised software.

As part of their development activities, students should thoroughly test their database, spreadsheet and infographics and/or dynamic data visualisations solutions to ensure that they meet the requirements of the area of study.

Functionality and validation testing are expected to be documented using testing tables. Testing tables are to include a clear description of each test to be performed, the test data used, and both the expected and actual results. Students should be encouraged to document all failed tests, along with the actions and mitigations taken, to demonstrate the changes made as a result of their testing process.
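A testing table of this kind can be sketched in Python. The example below assumes a hypothetical validation rule: a year must be a whole number from 2000 to 2024, matching the timeframe in the sample scope. It runs valid, boundary and invalid test data against the rule and records the expected and actual result for each test. The rule and the test data are illustrative only.

```python
# Hypothetical validation rule: a year must be a whole number from 2000 to 2024.
def valid_year(value):
    try:
        year = int(value)
    except (TypeError, ValueError):
        return False  # blank or non-numeric entries fail validation
    return 2000 <= year <= 2024


# Each test: description, test data, expected result.
TESTS = [
    ("Valid year inside range", "2012", True),
    ("Lower boundary", "2000", True),
    ("Upper boundary", "2024", True),
    ("Just below range", "1999", False),
    ("Just above range", "2025", False),
    ("Non-numeric entry", "twenty", False),
    ("Empty field", "", False),
]


def run_tests(tests):
    """Return testing-table rows: description, data, expected, actual, result."""
    rows = []
    for description, data, expected in tests:
        actual = valid_year(data)
        result = "Pass" if actual == expected else "Fail"
        rows.append((description, repr(data), expected, actual, result))
    return rows


table = run_tests(TESTS)
print(f"{'Test':<26}{'Data':<10}{'Expected':<10}{'Actual':<8}Result")
for row in table:
    print(f"{row[0]:<26}{row[1]:<10}{str(row[2]):<10}{str(row[3]):<8}{row[4]}")
```

Every row passes here. If a test failed, its row would stay in the table and the fix would be recorded alongside it.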

Students should use the evaluation criteria they developed in Unit 3 Outcome 2 when evaluating the efficiency and effectiveness of their infographics and/or dynamic data visualisations. The evaluation strategy should determine the extent to which the infographics and/or dynamic data visualisations present the findings in response to the research question.

Students can complete this evaluation in a variety of ways:

- using a rubric and a comment section

- using a checklist incorporating functional and non-functional requirements

- writing a short report that includes comments on each criterion evaluated.

Throughout the SAT process, students should collect evidence to support their assessment of the effectiveness of the project plan in managing the project. While this is not an exhaustive list, this evidence may take the form of progress journals, annotations to the project plan, screenshots of infographics and/or dynamic data visualisations, photographs of design iterations and annotated drafts of diagrams.

This evidence can then be collated and presented in the form of a written report or an annotated visual plan.

Unit 3 Data analytics

Sample approaches to developing an assessment task

Area of Study 1

On completion of this unit the student should be able to interpret teacher-provided solution requirements and designs, extract data from large repositories, manipulate and cleanse data, conduct statistical analysis and develop data visualisations to display findings.

The VCE Applied Computing Study Design (pages 37–39) provides details of the key knowledge and key skills related to Unit 3 Outcome 1 and the corresponding area of study, Data analytics.

Teachers should be familiar with the area of study and outcome statement, relevant key knowledge and key skills in order to plan for the assessment task.

Teaching and learning activities should be selected to enable students to demonstrate their understanding of the key knowledge and key skills. These activities should include a range of theoretical and practical activities to develop and extend student knowledge. Sample teaching and learning activities are included in the Support material.

The VCE Applied Computing Study Design (page 43) provides details of the school-based assessment required to be undertaken to demonstrate the key knowledge and key skills related to Unit 3 Outcome 1 and the corresponding area of study. The assessment details are outlined below:

- In response to teacher-provided solution requirements and designs:

  - extract and reference data from large repositories into a database

  - query data using databases and SQL

  - use spreadsheet functions to manipulate data

  - statistically analyse data in spreadsheets

  - develop data visualisations.

- Task time allocated should be 6–10 lessons.

The assessment task must directly assess the student’s understanding of the key knowledge and key skills as well as their ability to apply these to the assessment task.

Students should be given instructions regarding the requirements of the task, including time allocation, format of student responses and the marking scheme/assessment criteria. The marking scheme/assessment criteria used to assess the student’s level of performance should reflect the VCAA performance descriptors for Unit 3 Outcome 1.

Teachers should plan to provide requirements and designs to extract data from large repositories, link to database and spreadsheet software tools, conduct statistical analysis and present the findings from this analysis by developing data visualisation solutions. These activities should vary in length and difficulty, providing students with sufficient opportunities to demonstrate their knowledge and to meet the requirements of the outcome. Teachers should also note that the study design (page 43) outlines the requirements of the assessment task.

Teachers can choose how to deliver this outcome, either by teaching towards and assessing each component of the task one at a time or conducting the assessment task in one block of time after teaching. While due dates for assessment are school-based decisions, teachers should note that the study design specifies the overall time allocated to the task as 6–10 lessons in duration.

The approach that teachers employ to develop the Unit 3 Outcome 1 assessment task may follow the steps below:

1. Select the key knowledge and key skills to determine the content to be used in each component of the assessment task. Consider the performance descriptors, the prescribed list of software tools and functions, and the outcome-specific requirements on pages 38 and 39 of the study design.

2. Determine a scenario or small case study that each activity will address. Teachers could use either an overarching case study or a series of tasks, each containing its own distinct set of requirements and designs.

3. For the overall case study/scenario, or for each task:

   - Draft some solution requirements and constraints that could be provided to students based on the content decided in steps 1 and 2 above.

   - Develop designs that will be incorporated into the scenario provided to students. Use a range of designs based on the key knowledge. Students should have the opportunity to interpret each of the listed design tools.

   - Ensure that the scenario, requirements and designs are consistent with each other and refine as required.

4. After developing the scenario, check that the entire task is accessible and provides students with sufficient opportunities to demonstrate their ability to meet the requirements of the outcome. The VCAA performance descriptors provide guidance.

5. Teachers should first complete the assessment task themselves. This means extracting data from the data source, creating and importing data into the database, creating queries, exporting data to a spreadsheet for analysis and developing data visualisations. This will help them understand how students may respond to the solution requirements and designs. It will also help ensure that the time allocation and marking scheme are appropriate for the task being developed. Refinements to the task may occur as a result of this process.
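The teacher trial run described above involves importing data into a database, querying it with SQL and exporting the results for spreadsheet analysis. This workflow can be sketched with Python’s built-in `sqlite3` and `csv` modules. The sketch is a minimal illustration only. The table name, column names and values are hypothetical and made up. Schools will use whichever database and spreadsheet software meet the prescribed list of software tools.

```python
import csv
import io
import sqlite3

# Hypothetical extract from a large repository (made-up values, not real data).
EXTRACT = """country,year,renewable_share
Australia,2022,32.0
Australia,2023,35.5
Canada,2022,68.0
Canada,2023,66.5
India,2022,22.0
India,2023,21.0
"""

conn = sqlite3.connect(":memory:")  # temporary in-memory database for the sketch
conn.execute("CREATE TABLE energy (country TEXT, year INTEGER, renewable_share REAL)")

# Import the extracted rows; SQLite converts numeric text to INTEGER/REAL
# because of the column types declared above.
rows = csv.DictReader(io.StringIO(EXTRACT))
conn.executemany("INSERT INTO energy VALUES (:country, :year, :renewable_share)", rows)

# Query: average renewable share per country for the selected years, highest first.
query = """
    SELECT country, ROUND(AVG(renewable_share), 2) AS avg_share
    FROM energy
    WHERE year BETWEEN 2022 AND 2023
    GROUP BY country
    ORDER BY avg_share DESC
"""
results = conn.execute(query).fetchall()
conn.close()

# Export the query results as CSV text, ready to open in a spreadsheet.
out = io.StringIO()
writer = csv.writer(out)
writer.writerow(["country", "avg_share"])
writer.writerows(results)
print(out.getvalue())
```

For this made-up extract the exported CSV lists Canada (67.25), Australia (33.75) and India (21.5). The results could then be analysed and charted in a spreadsheet.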

If schools use commercially produced tasks as stimulus material when developing assessment tasks, they should be significantly modified in terms of both context and content to ensure the authentication of student work. Changing the name of a person or organisation mentioned in a case study/scenario is not considered to be significantly modified. Keeping assessment tasks identical except for the years included in the criteria of a query is not considered to be significantly modified. Similar tasks should not be used in future years without modification of context and content. New tasks should be created each year.

The teacher should decide the most appropriate time and the conditions for conducting this assessment task and inform the students ahead of the date. This decision is a result of several considerations including:

- the estimated time it will take to teach the key knowledge and key skills for the outcome

- the likely length of time required for students to complete the task, noting that the study design specifies 6–10 lessons for the entire task

- the classroom environment the assessment task will be completed in

- whether the assessment task will be completed under open-book or closed-book conditions

- any additional resources required by students

- when tasks are being conducted in other subjects and the workload implications for students.

The performance descriptors in the Support material give a clear indication of the characteristics and content that should be apparent in a student response at each level of achievement, from very low to very high. The specified assessment task is listed on page 43 of the study design. The assessment task is to be out of 100 marks.

Teachers may develop their own marking schemes for this outcome, provided they are consistent with the VCAA performance descriptors for Unit 3 Outcome 1. To be consistent with the performance descriptors, teacher-generated schemes must take into account the following points:

- interpretation of solution requirements and designs to develop databases, spreadsheets and data visualisations

- identification, selection, extraction and validation of data from large repositories of data using database software

- use of the APA referencing system

- manipulation and cleansing of data using spreadsheet software

- statistical analysis of the data to identify trends, relationships and patterns

- selection, application and justification of formats and conventions to create effective data visualisations, and

- development and application of suitable testing techniques for software tools used.

Teacher judgement should be used to determine the weighting of each criterion within each task and the overall weighting of each task as part of the wider SAC task. While weightings are not explicit within the VCAA performance descriptors, teachers must also understand that the criteria are not intended to be equally weighted.
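The minimal Python sketch below illustrates how unequal weightings still combine into a mark out of 100. It assigns hypothetical marks to each of the criteria listed above and checks that they sum to 100. It then converts a student’s achievement on each criterion into a total. The weightings and the student’s results are invented for the example and are not VCAA recommendations.

```python
# Hypothetical criterion weightings (in marks) for a Unit 3 Outcome 1 task.
# These numbers are illustrative only; the VCAA does not prescribe them.
WEIGHTS = {
    "interpret requirements and designs": 15,
    "extract and validate data from repositories": 15,
    "APA referencing": 5,
    "manipulate and cleanse data": 15,
    "statistical analysis": 20,
    "effective data visualisations": 20,
    "testing techniques": 10,
}
assert sum(WEIGHTS.values()) == 100, "weightings must total 100 marks"


def total_mark(proportions):
    """Convert the proportion achieved on each criterion (0 to 1) into a mark out of 100."""
    return round(sum(WEIGHTS[c] * proportions[c] for c in WEIGHTS), 1)


# Hypothetical results: proportion of each criterion's marks achieved by one student.
student = {
    "interpret requirements and designs": 0.8,
    "extract and validate data from repositories": 0.6,
    "APA referencing": 1.0,
    "manipulate and cleanse data": 0.7,
    "statistical analysis": 0.5,
    "effective data visualisations": 0.75,
    "testing techniques": 0.4,
}
print(total_mark(student))
```

For this hypothetical student the total is 65.5 out of 100.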

Unit 4 Data analytics

Sample approaches to developing an assessment task

Area of Study 2

On completion of this unit the student should be able to respond to a teacher-provided case study to analyse the impact of a data breach on an organisation, identify and evaluate threats, evaluate current security strategies and make recommendations to improve security strategies.

The VCE Applied Computing Study Design (pages 46–48) provides details of the key knowledge and key skills related to Unit 4 Outcome 2 and the corresponding area of study, Cyber security: data security.

Teachers should be familiar with the area of study and outcome statement, relevant key knowledge and key skills in order to plan for the assessment task.

Teaching and learning activities should be selected to enable students to demonstrate their understanding of the key knowledge and key skills. These activities should include a range of theoretical and practical activities to develop and extend student knowledge. Sample teaching and learning activities are included in the Support material.

The VCE Applied Computing Study Design (page 50) provides details of the school-based assessment required to be undertaken to demonstrate the key knowledge and key skills related to Unit 4 Outcome 2. The assessment details are outlined below:

- Task: The case study scenario needs to enable:

  - an analysis of the breach

  - an evaluation of the threats

  - recommendations to improve security strategies.

- Format: The student’s performance will be assessed using one of the following:

  - structured questions

  - a report in written format

  - a report in multimedia format.

- Task time: 100–120 minutes.

The assessment task must directly assess the student’s understanding of the key knowledge and key skills as well as their ability to apply these to the assessment task.

Students should be given instructions regarding the requirements of the task, including time allocation, format of student responses and the marking scheme/assessment criteria. The marking scheme/assessment criteria used to assess the student’s level of performance should reflect the VCAA performance descriptors for Unit 4 Outcome 2.

Teachers should plan to provide a case study that includes an example of a data breach impacting an organisation and individuals, providing students with sufficient opportunities to demonstrate their knowledge and to meet the requirements of the outcome.

The approach that teachers employ to develop the Unit 4 Outcome 2 assessment task may follow the steps below:

- Consider the assessment tasks stated in the VCE Applied Computing Study Design on page 50.

- Select the key knowledge and key skills to determine the content to be used in each component of the assessment task. Consider the performance descriptors.

- Based on the selected approach, draft prompts that relate to the selected key knowledge and key skills.

- For the development of a case study, consider a fictitious organisation but one that is a real-world example with a reasonable level of complexity. Media articles can assist with this.

- Write the case study for the organisation. Key content within the case study should be based on the key knowledge and key skills.

- A case study for this task needs to refer to an organisation with security strategies that can be analysed and discussed, and a data breach whose impact can be identified and evaluated.

- In order for students to evaluate the effectiveness of the organisation’s data and information security strategies, the case study needs to include strategies for each of the following: confidentiality, integrity and availability.

- In order to create an environment where students can assess threats, consider the types of threats in the key knowledge for this outcome: accidental, deliberate and events-based. There should be some references to each of these types of threats impacting the organisation.

- Students should be able to clearly identify the relevant legislation impacting the case study. This could be the Privacy Act 1988 (Cwlth), the Privacy Amendment (Notifiable Data Breaches) Act 2017 (Cwlth), the Health Records Act 2001 (Vic) or the Privacy and Data Protection Act 2014 (Vic). Students could be given information on the type and extent of the data breach, the type of organisation (governmental or private), the amount the organisation earns each year, the location of the organisation and whether the organisation is involved in sharing personal or health data.

- The organisation needs to have a disaster recovery plan described so it can be evaluated by students.

- When the case study has been written, refine and finalise the structured questions or prompts based on the case study.

- Teachers should first complete the assessment task themselves by writing the solutions to the questions or prompts using only the case study. This will assist them to understand how students may respond to the case study. It will further ensure that time allocation and marking schemes are appropriate for the task being conducted. Refinements to the task may occur as a result of this process.

If schools use commercially produced tasks as stimulus material when developing assessment tasks, they should be significantly modified in terms of both context and content to ensure the authentication of student work. Changing the name of a person or organisation mentioned in a case study is not considered to be significantly modified. Similar tasks should not be used in future years without modification of context and content. New tasks should be created each year.

The teacher must decide the most appropriate time and conditions for conducting this assessment task and inform the students ahead of the date. This decision is a result of several considerations including:

- the estimated time it will take to teach the key knowledge and key skills for the outcome

- the likely length of time required for students to complete the task (noting that the study design (page 50) specifies 100–120 minutes)

- the classroom environment the assessment task will be completed in

- whether the assessment task will be completed under open-book or closed-book conditions

- any additional resources required by students

- when tasks are being conducted in other subjects and the workload implications for students.

The performance descriptors give a clear indication of the characteristics and content that should be apparent in a student response at each level of achievement from very low to very high. The specified assessment task is listed on page 50 of the study design. The assessment task is to be out of 100 marks.

Teachers may develop their own marking schemes for this outcome, provided they are consistent with the VCAA performance descriptors for Unit 4 Outcome 2. To be consistent with the performance descriptors, teacher-generated schemes must take into account the following points:

- analysis and description of a data breach and its impact on the organisation

- identification and evaluation of the threats to the security of data and information

- examination and description of organisational data and information security strategies

- proposal and application of evaluation criteria to evaluate the effectiveness of current data and information security strategies

- identification and discussion of legal and ethical consequences of ineffective data and information security strategies

- evaluation of the organisation’s disaster recovery plan

- recommendations and justifications of improvements to current security strategies.

Teacher judgment should be used to determine the weighting of each criterion within the SAC task. While weightings are not explicit within the VCAA performance descriptors, teachers must understand that the criteria are not intended to be equally weighted.

Units 3 and 4: Software development – School-assessed Task

When designing learning activities for the School-assessed Task, teachers should refer to the problem-solving methodology specifications, and to the areas of study and outcomes, including key knowledge and key skills, as listed in the VCE Applied Computing Study Design.

The following table gives a breakdown of the four stages of the problem-solving methodology for the School-assessed Task.

| Stage | Unit 3 Outcome 2 | Unit 4 Outcome 1 |
|---|---|---|
| Project management | Create, monitor and modify project plans (Gantt chart) | Monitor, modify and annotate project plans; assess the effectiveness of the project plan |
| Analysis | Document a problem, need or opportunity; collect data; apply analysis tools; determine solution requirements, constraints and scope; document a software requirements specification | |
| Design | Generate design ideas; develop evaluation criteria; produce detailed designs | |
| Development | | Develop a software solution; write internal documentation; use appropriate data types, data structures and data sources; apply suitable naming conventions; apply validation techniques; apply debugging and alpha testing techniques; conduct beta testing |
| Evaluation | | Evaluate the efficiency and effectiveness of the software solution |

The Software development School-assessed Task is in two parts involving Unit 3 Outcome 2 and Unit 4 Outcome 1.

The School-assessed Task contributes 30 per cent to the study score for Software Development. Details of the assessment task can be found on page 67 of the VCE Applied Computing Study Design.

Teachers must be aware of the current VCE Applied Computing: Software Development Administrative information for School-based Assessment. This document contains assessment information on the nature and scope of the task, mandated assessment rubrics and authentication information, including forms and School-based Assessment Task assessment sheets for scores. Teachers are reminded of the need to comply with the authentication requirements specified in the Assessment: School-based Assessment section of the current VCE Administrative Handbook. This is important to ensure that undue assistance is not provided to students undertaking assessment tasks.

Teachers must plan and use observations of student work in order to monitor and record each student’s progress as part of the authentication process. A record of these details is to be included on the Authentication record form in the VCE Applied Computing: Software Development: Administrative information for School-based Assessment.

The VCAA conducts professional learning for teachers of Software Development in February and March of each year through webinars. These are known as SAT Webinars. Details of these professional learning sessions are advertised in the November edition of the VCAA Bulletin each year.

Unit 3: Area of Study 2 – Software development: analysis and design

The first part of the SAT requires students to identify a problem, need or opportunity that can be developed as a software solution. Students may reach out to friends, relatives, local businesses or community groups to determine the problem, need or opportunity that requires a solution. Doing this provides them with an authentic client from whom to seek feedback and clarification throughout the process.

Regardless of how the teacher plans to deliver the learning program for Unit 3 Outcome 2, students should be informed of the structure of the SAT as early in the school year as possible, so that they have time to find an authentic problem, need or opportunity on which to focus their assessment.

Teachers should not provide individual students or classes with needs or opportunities; students must generate their needs or opportunities themselves.

At this stage, students should begin documenting their thinking to ensure that they have considered the entire SAT process.

Teachers should establish and communicate a school-based process whereby students discuss their SAT idea/s with the teacher. The purpose of this process is to:

- ensure that the software solution can be feasibly completed within the timeframe available

- ensure that the scope of the proposed software solution is feasible in relation to the student’s demonstrated programming skills.

The teacher may involve a colleague, learning area leader, VCE coordinator or deputy/assistant principal in this process for the purposes of transparency and fairness.

Solution brief

Once endorsed to proceed, students are required to prepare a brief for their teacher that outlines the proposed direction they wish to take in relation to their SAT across Unit 3 Outcome 2 and Unit 4 Outcome 1. The following content should be included in the brief:

- An explanation of the problem, need or opportunity, and how the proposed software solution will aim to address the situation

- A brief description of the intended users or clients

- The programming language(s) to be used in the development of the software solution*

- A brief description of how the solution will use relevant features of a programming language from the software tools and functions and outcome-specific requirements document.

*Note: If the programming language(s) differs from the language(s) being used for the learning and assessment taking place as part of Unit 3 Outcome 1, the teacher should confirm that the student is proficient in the language before approving the focus of the SAT.

Students will produce a project plan (Gantt chart) that outlines the tasks, sequencing, time allocation, dependencies, milestones and the critical path. They will follow the project plan to develop their software solution to their identified need or opportunity. The project plan takes into consideration all stages and activities of the problem-solving methodology covered in Unit 3 Outcome 2 and Unit 4 Outcome 1.

Once the project plan has been developed it will be monitored and modified throughout the entire project. Students do not have to use dedicated project-management software in the development of their project plan.
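The critical path mentioned above is the longest chain of dependent tasks in the project plan; a delay to any task on it delays the whole project. The following is a minimal sketch of how the critical path could be worked out from task durations and dependencies, assuming durations in days and a plan with no circular dependencies. The task names and durations are hypothetical and are not taken from any VCAA sample.

```python
# Hypothetical SAT tasks: name -> (duration in days, list of prerequisite tasks).
# Names and durations are illustrative only.
TASKS = {
    "Collect data": (5, []),
    "Analysis tools": (3, ["Collect data"]),
    "SRS": (4, ["Analysis tools"]),
    "Design ideas": (4, ["SRS"]),
    "Evaluation criteria": (2, ["SRS"]),
    "Detailed designs": (6, ["Design ideas", "Evaluation criteria"]),
}


def critical_path(tasks):
    """Return (total duration, tasks on the critical path in order).

    Assumes the dependency graph has no cycles.
    """
    finish = {}      # earliest finish time of each task
    best_prev = {}   # prerequisite that determines each task's earliest start

    def earliest_finish(name):
        if name not in finish:
            duration, deps = tasks[name]
            start = 0
            best_prev[name] = None
            for dep in deps:
                dep_finish = earliest_finish(dep)
                if dep_finish > start:  # latest-finishing prerequisite wins
                    start = dep_finish
                    best_prev[name] = dep
            finish[name] = start + duration
        return finish[name]

    for name in tasks:
        earliest_finish(name)

    # The project ends when the latest-finishing task ends; walk back from it.
    node = max(finish, key=finish.get)
    total = finish[node]
    path = []
    while node is not None:
        path.append(node)
        node = best_prev[node]
    return total, list(reversed(path))


if __name__ == "__main__":
    total, path = critical_path(TASKS)
    print(total)             # 22
    print(" -> ".join(path))  # Collect data -> Analysis tools -> SRS -> Design ideas -> Detailed designs
```

Here "Evaluation criteria" has two days of slack: it can slip without delaying "Detailed designs", so it is not on the critical path.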

Below is a sample of a project plan.

The analysis stage of the problem-solving methodology requires students to:

- collect and analyse data

- use analytical tools to depict relationships between users, data and systems

- produce a software requirements specification.

Data collection

Students are required to collect data that will inform the analysis of their need or opportunity. A range of data collection techniques should be used, including interviews, observation, reports and surveys. The data collected must provide students with sufficient information to determine requirements, constraints and scope, user requirements and the proposed technical environment that the solution will operate within.

Analytical tools

Students use analytical tools and techniques to diagrammatically depict the relationships between data, users and the digital systems involved with the software solution.

Analytical tools include context diagrams (Level 0), data flow diagrams (Level 1) and use case diagrams. Students should ensure that their diagrams are consistent with their data collection in relation to the current system or manual processes. The analytical tools produced should be included in the software requirements specification.

Below are some simple representations of a context diagram, data flow diagram and use case diagram.

A software requirements specification (SRS) document is then developed by students to clearly outline the proposed software solution to their identified problem, need or opportunity. The SRS is a formal document that details the purpose of the software solution and contains information that will be used to support the design of the software solution in Unit 3 Outcome 2, and the development of the software solution in Unit 4 Outcome 1.

The presentation of the SRS should include the following content:

- the identified problem, need or opportunity

- functional requirements

- non-functional requirements

- constraints

- scope

- user characteristics (general characteristics of the proposed users for the software solution)

- technical environment (description of the environment in which the software solution will operate)

- analytical tools depicting existing processes and systems:

  - context diagrams

  - data flow diagrams

  - use case diagrams.

The design stage of the problem-solving methodology requires students to:

- generate a range of design ideas

- develop evaluation criteria

- develop and justify detailed designs.

Design ideas

Students generate and document a range of design ideas for their proposed software solution. These design ideas should be broad in nature and consider both the functionality and appearance of the proposed solution. Within their design ideas, students may consider the user experience and design principles that influence the design of their software solution. Examples of design ideas may include mood boards, brainstorms, mind maps and sketches, supported by annotations. At this point of the design stage, teachers should encourage students to seek feedback on their design ideas from their client or potential users.

Evaluation criteria

Students are required to develop criteria that will be used to evaluate their design ideas and, in Unit 4 Outcome 1, the software solution. Evaluation criteria for design ideas should help the student consider how each design idea links to the requirements outlined in the SRS and the proposed software solution. Evaluation criteria for the software solution should consider whether the requirements outlined in the SRS were met, as well as the efficiency and effectiveness of the software solution.

Detailed designs

After receiving feedback from the client or potential users and evaluating the design ideas against the criteria, students develop their design ideas into detailed designs for the proposed software solution. The detailed designs should represent how students intend the proposed software solution to function and appear. When generating the detailed designs, students should also incorporate characteristics of user experience and design principles that influence appearance and functionality. Examples of detailed designs that may be included are data dictionaries, mock-ups (with explanations and justifications), object descriptions, IPO charts and pseudocode. Students may again seek feedback from the client at this point.

Below is an example of the process for ideating and developing detailed designs.

The activities in this area of study need to be managed by the student as part of their project plan, which requires monitoring their progress in order to remain on track with meeting all the identified milestones throughout the School-assessed Task.

If the analytical tools, SRS, or detailed design produced and assessed in Unit 3 Area of Study 2 are incomplete or contain significant errors, students have the opportunity to make adjustments to their analysis and/or design. However, the updated component/s cannot be reassessed.

Teachers can provide feedback on the quality of the analysis or designs, but any adjustments must be initiated by the student and not directed by the teacher. Although the modified analysis and/or design is not reassessed, this opportunity prevents negative consequential effects in the development stage of Unit 4 Outcome 1.

Unit 4: Area of Study 1 – Software development: development and evaluation

The second part of the SAT requires students to develop and evaluate the software solution in line with the software requirements specification and detailed designs produced in Unit 3 Outcome 2. The software solution is tested to ensure that it meets requirements and is usable. Students then evaluate the efficiency and effectiveness of the software solution and assess the effectiveness of the project plan.

Students are required to monitor, modify and annotate their project plan as they progress through the development and evaluation stages of the problem-solving methodology. This can include adjusting tasks and time allocations, and recording details in journals or work logs. Attention to detail throughout this process will enable students to better assess the effectiveness of the project plan.

The development stage of the problem-solving methodology requires students to develop their detailed designs from Unit 3 Outcome 2 into a software solution.

In order to develop their software solution, students are required to use an appropriate object-oriented programming language that meets the software tools and functions, and outcome-specific requirements of the study. Students may choose to develop their software solution using an alternative object-oriented programming language to that studied in Unit 3 Outcome 1. However, teachers must consider the following before supporting this approach:

- whether the proposed alternative object-oriented programming language meets the programming requirements of the study

- whether the student can demonstrate proficiency with the proposed object-oriented programming language (to the same level demonstrated in Unit 3 Outcome 1)

- whether the teacher is able to support the student’s use of the proposed object-oriented programming language

- whether the teacher is able to interpret code written in the proposed object-oriented programming language.

Teachers should document this as part of the authentication process.

The student software solution should include:

- appropriate features of the selected object-oriented programming language

- suitable data types, data structures and data sources

- validation techniques

- internal documentation of code.
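The study design text above does not name specific validation techniques at this point. The sketch below assumes the commonly taught existence, type and range checks, and also illustrates descriptive naming and internal documentation (a docstring and comments). The `validate_age` function, its messages and the 5 to 120 limits are hypothetical.

```python
def validate_age(raw_value):
    """Validate a hypothetical 'age' field entered as text by a user.

    Returns a (is_valid, message) tuple. Checks are applied in order:
    existence, then type, then range.
    """
    # Existence check: the field must not be missing or blank.
    if raw_value is None or raw_value.strip() == "":
        return False, "Age is required."

    # Type check: the text must convert to a whole number.
    try:
        age = int(raw_value)
    except ValueError:
        return False, "Age must be a whole number."

    # Range check: the number must fall within the (hypothetical) allowed limits.
    if not 5 <= age <= 120:
        return False, "Age must be between 5 and 120."

    return True, "OK"


if __name__ == "__main__":
    for value in ["", "abc", "200", "17"]:
        print(repr(value), validate_age(value))
```

Ordering the checks this way means each message describes the first problem found, which keeps feedback to the user specific.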

Students should test their software solution thoroughly to ensure that it meets requirements as expected. Debugging and alpha testing are to be conducted and documented (using testing tables) throughout the development of the solution. Testing is to include the creation of test cases and the use of relevant test data. Testing tables are to include both expected and actual results, along with clear descriptions of the test to be performed and the test data to be used in each test. Students should be encouraged to document all tests that failed, including the actions and mitigations taken, to demonstrate the changes made as a result of a robust testing process and to support the authentication of their work.
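The testing table described above can be sketched in code as a list of test cases, each with a description, test data and an expected result, from which the actual result and a pass/fail outcome are recorded. This is a minimal sketch only: `discounted_price` is a hypothetical function under test, and a student's testing table would normally be presented as a document rather than generated by code.

```python
def discounted_price(price, percent_off):
    """Hypothetical function under test: price reduced by percent_off, to 2 d.p."""
    return round(price * (1 - percent_off / 100), 2)


# Each test case: (description of the test, test data, expected result).
TEST_CASES = [
    ("Typical value", (100.0, 10), 90.0),
    ("No discount (boundary)", (50.0, 0), 50.0),
    ("Full discount (boundary)", (80.0, 100), 0.0),
]


def run_testing_table(func, cases):
    """Run each case and return rows of (description, data, expected, actual, passed)."""
    rows = []
    for description, data, expected in cases:
        actual = func(*data)
        rows.append((description, data, expected, actual, actual == expected))
    return rows


if __name__ == "__main__":
    print(f"{'Test':<26}{'Data':<14}{'Expected':<10}{'Actual':<10}Result")
    for description, data, expected, actual, passed in run_testing_table(
        discounted_price, TEST_CASES
    ):
        result = "Pass" if passed else "Fail"
        print(f"{description:<26}{str(data):<14}{expected:<10}{actual:<10}{result}")
```

Keeping the expected result beside the actual result makes any failed test, and the fix that followed, easy to see in the documentation.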

Students are required to design, conduct and document beta tests with two or more potential ‘users’ of the software solution. Potential ‘users’ could include the actual clients who will benefit from the development of the software solution or students acting as real users of the software solution. Beta testing could be conducted by observing or surveying users as they interact with the software solution. Results should be documented in order to identify errors and issues.

Based on the results from beta testing, students then make recommendations for how the software solution could be improved, focusing on the appearance and behaviour of the solution.

The evaluation stage of the problem-solving methodology requires students to:

- develop an evaluation strategy

- evaluate the efficiency and effectiveness of the software solution

- assess the effectiveness of the project plan in managing the project.

The proposed evaluation strategy for the software solution should assume that the solution has been implemented with the client, because actual implementation is not always practically feasible for this task and is outside the scope of this assessment. The strategy should consider how each of the criteria developed in Unit 3 Outcome 2 will be evaluated and the evidence that needs to be collected to evaluate them. The evaluation criteria focus on the efficiency and effectiveness of the software solution.

Throughout the SAT process, students should be collecting evidence to support their assessment of the effectiveness of the project plan in managing the project. This evidence may include, but is not limited to, progress journals, annotations to the project plan, photographs of design iterations, annotated drafts of diagrams, annotated code samples, screenshots and feedback from users during beta testing.

This evidence can then be collated and presented in the form of a written report or an annotated visual plan.

Unit 3 Software development

Sample approaches to developing an assessment task

Area of Study 1

On completion of this unit the student should be able to interpret teacher-provided solution requirements and designs and use appropriate features of an object-oriented programming language to develop working software modules.

The VCE Applied Computing Study Design (pages 53–55) provides details of the key knowledge and key skills related to Unit 3 Outcome 1 and the corresponding area of study, Software development: programming.

Teachers should be familiar with the area of study and outcome statement, relevant key knowledge and key skills in order to plan for the assessment task.

Teaching and learning activities should be selected to enable students to demonstrate their understanding of the key knowledge and key skills. These activities should include a range of theoretical and practical activities to develop and extend student knowledge. Sample teaching and learning activities are included in the Support material.

The VCE Applied Computing Study Design (page 60) provides details of the school-based assessment required to be undertaken to demonstrate the key knowledge and key skills related to Unit 3 Outcome 1. The assessment details are outlined below:

- In response to teacher-provided solution requirements and designs, develop four working modules with increasing complexity of programming skills:

  - Module 1: Simple calculations using arithmetic, logical and conditional operators

  - Module 2: Reading and writing files

  - Module 3: Sorting and searching with functions or methods

  - Module 4: Classes and objects

- At least two modules must include a GUI, and all modules must include testing.

- Task time allocated should be 8–14 lessons.
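To give a sense of the scale of a software module, a hypothetical Module 3 requirement might ask students to sort a list of scores and then search it using functions. The sketch below assumes selection sort and binary search purely for illustration; teachers should check the key knowledge for the algorithms their own task must target. The function names and data are hypothetical.

```python
def selection_sort(values):
    """Return a new list with the items of values in ascending order."""
    result = list(values)  # copy so the caller's list is not modified
    n = len(result)
    for i in range(n - 1):
        # Find the smallest remaining item and swap it into position i.
        smallest = i
        for j in range(i + 1, n):
            if result[j] < result[smallest]:
                smallest = j
        result[i], result[smallest] = result[smallest], result[i]
    return result


def binary_search(sorted_values, target):
    """Return the index of target in sorted_values, or -1 if it is absent."""
    low, high = 0, len(sorted_values) - 1
    while low <= high:
        mid = (low + high) // 2
        if sorted_values[mid] == target:
            return mid
        if sorted_values[mid] < target:
            low = mid + 1   # target can only be in the upper half
        else:
            high = mid - 1  # target can only be in the lower half
    return -1


if __name__ == "__main__":
    scores = [72, 45, 91, 60, 88]
    ordered = selection_sort(scores)
    print(ordered)                     # [45, 60, 72, 88, 91]
    print(binary_search(ordered, 88))  # 3
    print(binary_search(ordered, 50))  # -1
```

Binary search only works on sorted data, which is why the sort runs first; this dependency is one way a module can build complexity on an earlier step.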

The assessment task must directly assess the student’s understanding of the key knowledge and key skills as well as their ability to apply these to the assessment task.

Students should be given instructions regarding the requirements of the task, including time allocation, format of student responses and the marking scheme/assessment criteria. The marking scheme/assessment criteria used to assess the student’s level of performance should reflect the VCAA performance descriptors for Unit 3 Outcome 1.

Teachers should plan to provide requirements and designs for four modules as outlined in the Study Design (page 60). These modules should vary in length and difficulty, providing students with sufficient opportunities to demonstrate their knowledge and to meet the requirements of the outcome. Teachers are reminded that software modules are intended to be small programs that may or may not form part of a larger software solution.

Teachers can choose how to deliver this outcome, either by teaching towards and assessing each module one at a time or conducting the assessment task in one block of time after teaching. While due dates for assessment are school-based decisions, teachers should note that the study design specifies the overall time allocated to the task as 8–14 lessons in duration.

The approach that teachers employ to develop the Unit 3 Outcome 1 assessment task may follow the steps below.

- Select the key knowledge and key skills to determine the required content and design tools to be used within each software module. Consider the performance descriptors and the software tools and functions, and outcome-specific requirements document.

- Determine a scenario or case study that each software module will address. Teachers could use an overarching case study across all four software modules (with distinct sets of requirements and designs for each software module) or alternatively, brief individual scenarios could be developed for each software module.

- For each software module:

  - Draft some functional and non-functional requirements that could be provided to students, based on the content determined in the first two steps above.

  - Develop designs that will be incorporated with the scenario provided to students. Students should have an opportunity to interpret each of the listed design tools across the four software modules.

  - Ensure that the scenario, requirements and designs are consistent with each other, and refine them as required.

- After developing the scenario, check that the entire task is accessible and provides students with sufficient opportunities to demonstrate their ability to meet the requirements of the outcome. The VCAA performance descriptors provide guidance.

- Teachers should first complete the assessment task themselves. This will assist them to understand how students may respond to the solution requirements and designs. It will further ensure that time allocation and marking schemes are appropriate for the task being developed. Refinements to the task may occur as a result of this process.

If schools use commercially produced tasks as stimulus material when developing assessment tasks, they should be significantly modified in terms of both context and content to ensure the authentication of student work. Changing the name of a person or organisation mentioned in a case study/scenario is not considered to be significantly modified. New tasks should be created each year. Similar tasks should not be used in future years without modification of context and content.

The teacher must decide the most appropriate time and conditions for conducting this assessment task and inform the students ahead of the date. This decision is a result of several considerations including:

- the estimated time it will take to teach the key knowledge and key skills for the outcome

- the likely length of time required for students to complete each software module, considering the study design indicates the entire task should be conducted across 8–14 lessons

- the classroom environment the assessment task will be completed in

- whether the assessment task will be completed under open-book or closed-book conditions

- any additional resources required by students

- when tasks are being conducted in other subjects and the workload implications for students.

The VCAA performance descriptors in the Support material give a clear indication of the characteristics and content that should be apparent in a student response at each level of achievement from very low to very high. The specified assessment task is listed on page 60 of the study design. The assessment task is to be out of 100 marks.

Teachers may develop their own marking schemes for this outcome, provided they are consistent with the VCAA performance descriptors for Unit 3 Outcome 1. To be consistent with the performance descriptors, teacher-generated schemes must take into account the following points:

- interpretation of solution requirements and designs

- selection of relevant data types, data structures and data sources

- use and justification of appropriate features of an OOP language

- development and application of suitable naming conventions and validation techniques

- documentation of the functioning of modules using internal documentation

- development and application of suitable debugging and testing techniques.

Teacher judgment should be used to determine the weighting of each criterion within each software module and the overall weighting of each software module as part of the wider SAC task. While weightings are not explicit within the VCAA performance descriptors, teachers must also understand that the criteria are not intended to be equally weighted.

Unit 4 Software development

Sample approaches to developing an assessment task

Area of Study 2

On completion of this unit the student should be able to respond to a teacher-provided case study to analyse an organisation’s software development practices, identify and evaluate current security controls and threats to software development practices, and make recommendations to improve practices.

The VCE Applied Computing Study Design (pages 63–65) provides details of the key knowledge and key skills related to Unit 4 Outcome 2 and the corresponding area of study, Cyber security: secure software development practices.

Teachers should be familiar with the area of study and outcome statement, relevant key knowledge and key skills in order to plan for the assessment task.

Teaching and learning activities should be selected to enable students to demonstrate their understanding of the key knowledge and key skills. These activities should include a range of theoretical and practical activities to develop and extend student knowledge. Sample teaching and learning activities are included in the Support material.

The VCE Applied Computing Study Design (page 66) provides details of the school-based assessment required to be undertaken to demonstrate the key knowledge and key skills related to Unit 4 Outcome 2. The assessment details are outlined below:

- Task: The case study scenario needs to enable:

  - an analysis of the organisation’s software development practices

  - an evaluation of the current security controls and threats

  - recommendations to improve practices.

- Format: The student’s performance will be assessed using one of the following:

  - structured questions

  - a report in written format

  - a report in multimedia format.

- Task time: 100–120 minutes.

The assessment task must directly assess the student’s understanding of the key knowledge and key skills as well as their ability to apply these to the assessment task.

Students should be given instructions regarding the requirements of the task, including time allocation, format of student responses and the marking scheme/assessment criteria. The marking scheme/assessment criteria used to assess the student’s level of performance should reflect the VCAA performance descriptors and the key skills for Unit 4 Outcome 2.

Regardless of the assessment task type selected by the classroom teacher, all tasks require the creation of a case study. Each task type requires students to apply their knowledge to the given context.

The approach that teachers employ to develop their assessment task may follow the steps below.

- Consider the assessment task stated in the VCE Applied Computing Study Design on page 66.

- Select the key knowledge and key skills to determine the required content to be used. Consider the performance descriptors for Unit 4 Outcome 2.

- Based on the selected approach, draft prompts that relate to the selected key skills and performance descriptors.

- For the development of a case study, consider a fictitious organisation but one that is based on a real-world example with a reasonable level of complexity. Media articles can assist with this.

- Write the case study for the organisation. Key content within the case study should be based on the targeted key knowledge and key skills selected.

- A case study for this task needs to refer to a medium to large organisation that is currently developing software in-house. Regardless of the context chosen, the organisation should have some secure software development practices in place that can be analysed and discussed.

- In order for students to evaluate the effectiveness of an organisation’s secure software development practices, the case study needs to consider how vulnerabilities may reduce the security of development practices and pose a risk to the data stored within the software being developed. The organisation should have some weaknesses in these areas, allowing students to recommend improvements.

- In order to create an environment where students can assess threats, consider the types of vulnerabilities listed in the key knowledge for this outcome: use of application programming interfaces (APIs), malware, unpatched software, poor identity and access management practices, man-in-the-middle attacks, insider threats, cyber security incidents, software acquired from third parties, ineffective code review practices, and combined environments (development, testing and production). There is no requirement for teachers to include all of these vulnerabilities within the case study; however, there should be clear references to the types of vulnerabilities selected by the teacher.

- Students should be able to clearly identify the relevant legislation affecting the organisation(s) described within the case study. This could include the Copyright Act 1968 and the relevant listed parts of the Privacy Act 1988 and the Privacy and Data Protection Act 2014. Because details such as annual turnover, location and public or private status determine which of these Acts apply, students could be given information on the type of organisation, the amount the organisation earns each year, the location of the organisation, whether it is a government or private organisation, and how the relevant legislation may apply to its ineffective practices. Industry frameworks (Essential Eight and Information Security Manual) could be used by students to identify potential issues with the current practices and to support recommendations for improvement.

- When the case study has been written, refine and finalise the structured questions or prompts based on the case study.

- Teachers should first complete the assessment task themselves by writing solutions to the questions or prompts using only the case study. This will help them understand how students may respond to the case study and will also help ensure that the time allocation and marking scheme are appropriate for the task. Refinements to the task may occur as a result of this process.

If schools use commercially produced tasks as stimulus material when developing assessment tasks, these tasks should be significantly modified in terms of both context and content to ensure the authentication of student work. Changing the name of a person or organisation mentioned in a case study is not considered a significant modification.

Similar tasks should not be used in future years without modification of context and content. New tasks should be created each year.

The teacher must decide the most appropriate time and conditions for conducting this assessment task and inform the students ahead of the date. This decision should take into account several considerations, including:

- the estimated time it will take to teach the key knowledge and key skills for the outcome

- the likely length of time required for students to complete the task (noting that the study design, on page 66, specifies 100–120 minutes)

- the classroom environment the assessment task will be completed in

- whether the assessment task will be completed under open-book or closed-book conditions

- any additional resources required by students

- when tasks are being conducted in other subjects and the workload implications for students.

The VCAA performance descriptors give a clear indication of the characteristics and content that should be apparent in a student response at each level of achievement, from very low to very high. The specified assessment task is listed on page 66 of the study design. The assessment task is to be marked out of 100.

Teachers may develop their own marking schemes for this outcome, provided they are consistent with the performance descriptors and key skills for Unit 4 Outcome 2. To be consistent with the performance descriptors, teacher-generated schemes must take into account the following points:

- analysis and description of an organisation’s software development practices

- proposal and application of evaluation criteria to evaluate the effectiveness of current software development practices

- identification and description of vulnerabilities and risks based on current practice

- identification and discussion of legal and ethical consequences of insecure software development practices

- recommendation and justification of improvements to organisations and their development environments to enhance secure development practices.

Teacher judgement should be used to determine the weighting of each criterion within the SAC task. Although weightings are not specified in the VCAA performance descriptors, teachers should understand that the criteria are not intended to be equally weighted.

The VCAA performance descriptors are advice only and provide a guide to developing an assessment tool when assessing the outcomes of each area of study. The performance descriptors can be adapted and customised by teachers in consideration of their context and cohort, and to complement existing assessment procedures in line with the VCE Administrative Handbook and the VCE assessment principles.

VCE performance descriptors can assist teachers in:

- moderating student work

- making consistent assessment decisions

- helping determine student point of readiness (zone of proximal development)

- providing more detailed information for reporting purposes.

Using VCE performance descriptors can assist students by providing them with informed, detailed feedback and by showing them what improvement looks like.

Teachers can also explore the VCE performance descriptors with their students, unpacking the levels of expected performance so students have a clear understanding of what is possible in terms of development and achievement.

When developing SAC tasks, teachers are advised to adapt the VCAA VCE performance descriptors to relate to the SAC task used and their school context. Teachers should use their professional judgement when deciding how to adapt the rubrics, considering the VCE assessment principles, the requirements of the relevant study design, the relevant outcome, key knowledge, key skills and assessment tasks, and the student cohort.

Teachers may consider using the following guidelines when adapting the VCE performance descriptors and/or developing an assessment tool:

- Develop the SAC task and assessment rubric simultaneously.

- Assess the outcome through a representative sample of key knowledge and key skills. Not all key knowledge and key skills will be formally assessed in a SAC task – some key knowledge and key skills are observable in classroom engagement and learning – but all criteria in any assessment tool must be drawn directly from the study design.

- Select the components of the VCE performance descriptors that are most appropriate and most relevant for the selected outcome and SAC task.

- Attempt to capture the skill level of a range of students within the cohort: the lowest expected quality of performance should be something most or all students can do, and the highest expected quality of performance should be something that extends the most able students. Similarly, ensure that the range of qualities identified in the rubric shows the lower and the upper range of what an individual student could show in terms of the outcome, key knowledge and the key skills.

- Where necessary, add specific key knowledge and/or key skills to provide context to the expected qualities of performance.

- Where necessary, remove expected qualities of performance that may not be relevant to the selected outcome and developed SAC task.

- Show a clear gradation across the expected qualities of performance, indicating progression from one quality to the next.

- Use consistent language from the study design outcome, key knowledge and key skills.

- Ensure command terms reflect the cognitive demands of the outcome. Refer to the glossary of command terms for a list of terms commonly used across the Victorian Curriculum F–10, VCE study designs and VCE examinations.

2025 Administrative Information for School-based Assessment

VCE Data Analytics: Administrative Information for School-based Assessment in 2025

VCE Software Development: Administrative Information for School-based Assessment in 2025